EMERGING DIALOGUES IN ASSESSMENT

Assessment of Interprofessional Education: Towards a Critical Mass

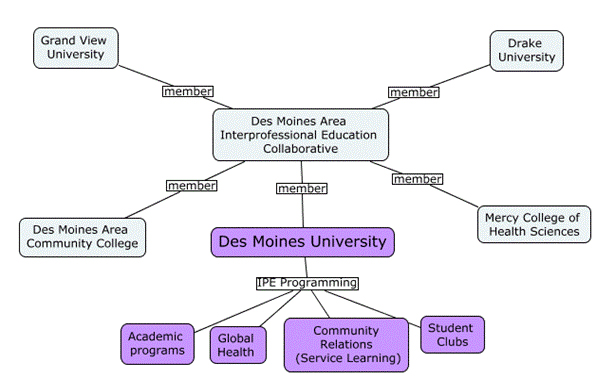

December 21, 2017

Michelle Rogers, Ph.D.

In this article, we discuss the challenges of assessing interprofessional education (IPE) and the approach one institution is taking to offer quality IPE experiences and measure their effectiveness. Dr. Rogers works as an assessment specialist at the institution highlighted in this article. Dr. Turbow provides the context for IPE and assessment based on research in this area.

Interprofessional education (IPE) is an institutional learning objective common to many schools of health professions. IPE occurs when “students from two or more professions learn about, from and with each other to enable effective collaboration and improve health outcomes” (World Health Organization [WHO], 2010). In recent years, there has been a tremendous focus on advancing quality methods of assessing interprofessional education (Blue, Chesluk, Conforti, & Holmboe, 2015; Lutfiyya, Brandt, & Cerra, 2016). According to Blue et al. (2015), although multiple stakeholders have a vested interest in assessing IPE, the assessment of these competencies needs further development.

Institutional Context

Des Moines University (DMU) is a graduate health sciences university made up of eight degree programs. Six of the programs have specialized accrediting agencies that require interprofessional education. While some accrediting bodies simply require that programs integrate interprofessional experiences into the curriculum, others have detailed IPE competencies outlining what students should be able to do by the time they graduate. For example, the American Association of Colleges of Osteopathic Medicine (AACOM) has a published set of competencies for interprofessional collaboration (AACOM, 2012).
Regardless of how prescriptive the competencies or standards are, academic programs must still grapple with the question of how to integrate developmentally appropriate interprofessional learning experiences and evaluate student learning outcomes given the resources available and institutional structures. Figure 1 illustrates some of DMU’s pre-clinical IPE structure and resources. Under ideal conditions, the structure facilitates cross-pollination across academic programs.

Several of the curricular IPE experiences in which students participate are offered through the Des Moines Area IPE Collaborative. The Collaborative is a consortium of local health science institutions that work together to develop IPE programming aligned to the Interprofessional Education Collaborative (IPEC) domains: Roles and Responsibilities, Interprofessional Communication, Teams and Teamwork, and Values and Ethics (Blue et al., 2015).

Co-curricular events offered through non-academic departments also serve as resources to support student development of IPE competencies. For example, at DMU, Community Relations sponsors an annual back-to-school physicals event. Facilitators structure the interprofessional experience so that students from multiple programs are able to learn from, with, and about each other’s professional roles as they administer supervised physical exams. Students are asked to complete a survey with open-ended questions in which they briefly describe what they learned from that experience. Research shows that sponsoring community events, such as health fairs and blood drives, is a prime way to support meaningful collaboration between students in different health science programs; portfolios may present an additional way to assess these co-curricular experiences, allowing students to document their learning over time (Turbow & Chaconas, 2016).
Planning with the end in mind

DMU aims to create and integrate learning activities that are accessible to students from diverse professional roles and backgrounds. In her role, Dr. Rogers has assisted faculty in designing and assessing experiences for students who have only one or two years of coursework. In reflecting on those instructional design experiences, Dr. Rogers noticed that working with faculty to formulate reasonably attainable learning objectives can be challenging. This challenge is partly due to uncertainty about students’ previous exposure to IPE and teamwork, and about their medical experience up to that point. It is also due to the difficulty of balancing curricular time with the depth and breadth of the desired learning outcomes. A one-time, 90-minute experience is not likely to produce outcomes comparable to those of a week-long medical mission trip. Even a month-long experience requires the faculty to prioritize which competencies to develop and assess. Hence, as faculty integrate experiences into the program curriculum or design new learning opportunities, it is important to establish realistic expectations for student learning and to align assessment processes with professional competencies, learning objectives, and experiences.

Formative evaluation

Formative evaluation plays a critical role in gathering feedback on the effectiveness of the IPE activity. This evaluation should include information about the effectiveness of the curriculum as well as actual student learning outcomes. During the implementation of the IPE experience, Dr. Rogers often works alongside facilitators, taking note of what went well and what did not. Student feedback is often included in this evaluation. Some of the prompts students were asked to complete are included below:

Dr. Rogers and colleagues triangulate data to evaluate the effectiveness of the experience for the students who participated and work together to make improvements.

How can we track achievement of IPE outcomes?

Given the large number and variety of students who participate in DMU co-curricular programming and the IPE collaborative, individual facilitators are not typically expected to track each student’s achievement of the intended learning. After all, some of the students may attend an event from outside of their own program or institution. Regardless, the facilitators may assess group performance. The authors will briefly discuss two strategies that may be leveraged to evaluate team performance.

The first strategy involves the team documenting their ideas and sharing them via a “gallery walk” on a large white note sheet so that facilitators and students can all see the ideas generated. This process enables facilitators to quickly assess the quality of the responses and determine whether they align with the learning objectives. A similar strategy involves having one student in each group document notes from their team discussion and email them to the instructor. The instructor then compiles this information to identify themes and shares them with the whole group the following class period. The instructor provides feedback to the class by identifying strengths and missed opportunities.

To evaluate individual performance, program faculty might assign students to write a reflection paper. The paper is graded using a rubric whose performance criteria are aligned to competency models and stated learning objectives, so that when grades are submitted, it is clear how students performed relative to the competencies assessed for the experience. The use of multiple raters may assist with examining consistency in grading.

While this article highlighted some strategies for addressing challenges in IPE assessment, these are not the only ways.
We hope that our article will encourage further dialogue as to how achievement of IPE outcomes can be effectively tracked. We are also interested in hearing about ways that institutions are intentionally designing learning experiences for their students. How are other institutions tracking individual and group achievement during the clinical phases of the program? How can we best engage administrators in assessing and evaluating IPE?

References

American Association of Colleges of Osteopathic Medicine. (2012, August). Osteopathic Core Competencies for Medical Students.

Blue, A., Chesluk, B., Conforti, L., & Holmboe, E. (2015, Summer). Assessment and evaluation in interprofessional education: Exploring the field. Journal of Allied Health, 44(2), 73-82.

Lutfiyya, M. N., Brandt, B., & Cerra, F. (2016, June). Reflections from the intersection of health professions education and clinical practice: The state of the science of interprofessional education and collaborative practice. Academic Medicine, 91(6), 766-771.

Turbow, D. J., & Chaconas, E. (2016). Piloting Co-Curricular ePortfolios. Assessment Update, 28(4), 5-7.

World Health Organization (WHO). (2010). Framework for action on interprofessional education & collaborative practice. Geneva, Switzerland: WHO. http://apps.who.int/iris/bitstream/10665/70185/1/WHO_HRH_HPN_10.3_eng.pdf?ua=1