
EMERGING DIALOGUES IN ASSESSMENTUsing A Validated Creativity Scale to Assess and Inform Revisions in a Creative Writing

March 30, 2023 Kevin Whiteacre, Professor, Department of Criminal Justice, University of Indianapolis

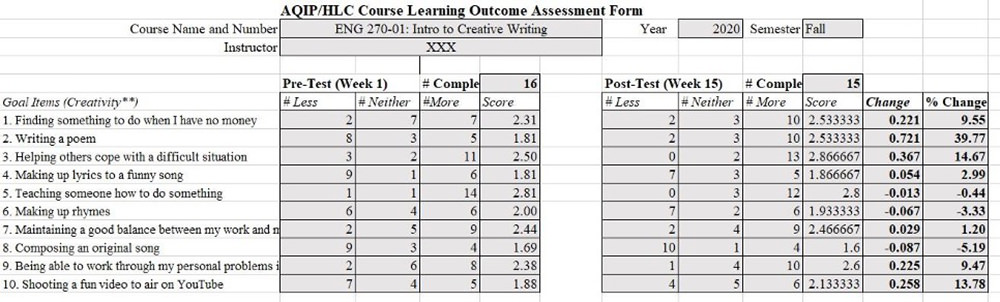

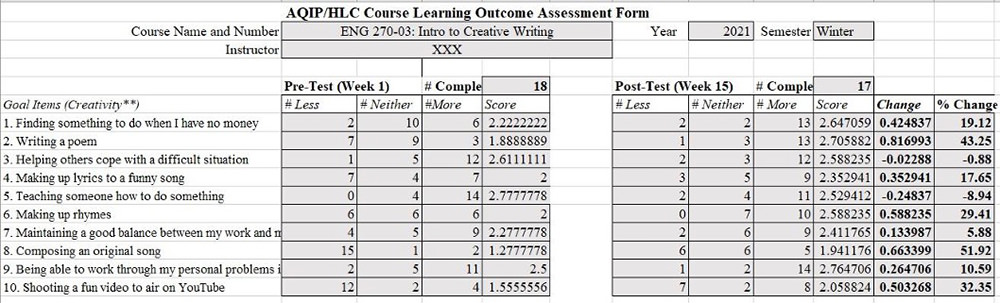

Course Assessing course learning outcomes is important for reflection, improvement, accountability, and accreditation, but it can be time-consuming and difficult to create valid, efficient, and practical learning outcome measures. Faculty, staff, and administrators who already feel stretched thin may view assessment as demanding too much time and resources (Kramer, 2008; Suskie, 2018; Macdonald, et al., 2014). The time demands can be especially onerous for part-time faculty who now comprise over 40 percent of college instructional staff (American Association of University Professors, 2020). Pre-existing, validated psychometric scales gleaned from the relevant research literature can provide quick, valid, and easy-to-use learning outcome measures that inform reflection and revision. This paper describes our experience adopting items from Kaufman’s (2012) Domains of Creativity Scale (K-DOCS) to measure learning outcomes for a general education creative writing course that also meets the university-wide learning goal of creativity. The Assessment Project English 270: Introduction to Creative Writing (ENGL 270) is part of the Creative Writing track within the English major at a small private liberal arts university located in the Midwest. ENGL 270 serves as a prerequisite for the other creative writing courses, fulfills two university General Education requirements–Fine Arts Applied and Fine Arts Theoretical–and it aligns with the university-wide learning goal of Creativity. The Creativity goal establishes that “Students will be able to develop or apply something new, innovative, imaginative or divergent” (University-Wide Learning Goals). This broadly defined university-wide learning outcome, arguably, poses a more difficult outcome to measure than the more task-oriented specific course outcomes, which can be measured more directly through quizzes, as well as writing and portfolio rubrics. 
The focus of this project was the university goal of Creativity and its definition and criteria. The authors searched the creativity literature for measures that had closed-ended items, established validity evidence, and relevance to the stated course learning outcomes. We ultimately chose the K-DOCS, which "offers researchers a short, free tool to assess self-perceptions of creative ability" (Kaufman, 2012, p. 304). Specifically, we selected ten items: five from the Performance subscale and five from the Self/Everyday subscale. The wording of each item is provided in Figure 1 below, an excerpt from the Fall reporting instrument. The Self/Everyday items are odd-numbered, and the Performance items are even-numbered. The directions for responding follow Kaufman (2012): "Compared to people of approximately your age and life experience, how creative would you rate yourself for each of the following acts? For acts that you have not specifically done, estimate your creative potential based on your performance on similar tasks." Students respond on a five-point Likert scale ranging from "much less creative" to "much more creative." These two subscales and the selected items related most directly to the course curriculum and outcomes and seemed most relevant to current students' experiences. We limited ourselves to five items per subscale to keep the instrument manageable and to reduce the burden on students completing the survey. In addition, some of the excluded items (such as "singing in harmony") bore little relevance to the creative writing course content and would have given the instructor little actionable information. We created brief online entry and exit surveys and administered them in week 1 (entry) and week 15 (exit) in both Fall 2020 and Winter 2021. Like all student course surveys, they were voluntary and anonymous.
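The scoring behind these items is simple enough to sketch. The Python below is a minimal illustration, not the authors' instrument (which was an Excel spreadsheet). It assumes responses are coded 1 for "much less creative" through 5 for "much more creative," that items are numbered 1 to 10 with the odd/even subscale split described above, and that an item a student skipped is simply absent from that student's record. The function names and sample responses are hypothetical.

```python
from statistics import fmean

# Assumption: items are numbered 1-10, odd = Self/Everyday, even = Performance,
# and each response is coded 1 ("much less creative") to 5 ("much more creative").
SCALE_MIN, SCALE_MAX = 1, 5


def subscale_of(item: int) -> str:
    """Map an item number to its K-DOCS subscale using the odd/even convention."""
    return "Self/Everyday" if item % 2 == 1 else "Performance"


def item_means(responses: list[dict[int, int]]) -> dict[int, float]:
    """Average each item across students; students who skipped an item are left out of its average."""
    items = sorted({item for student in responses for item in student})
    means: dict[int, float] = {}
    for item in items:
        scores = [student[item] for student in responses if item in student]
        if any(not SCALE_MIN <= s <= SCALE_MAX for s in scores):
            raise ValueError(f"item {item} has a response outside {SCALE_MIN}-{SCALE_MAX}")
        means[item] = fmean(scores)
    return means


def subscale_means(means: dict[int, float]) -> dict[str, float]:
    """Average the item means within each subscale."""
    groups: dict[str, list[float]] = {}
    for item, avg in means.items():
        groups.setdefault(subscale_of(item), []).append(avg)
    return {name: fmean(values) for name, values in groups.items()}


if __name__ == "__main__":
    # Hypothetical responses from two students to items 1-4.
    sample = [{1: 3, 2: 2, 3: 4, 4: 3}, {1: 4, 2: 3, 3: 4, 4: 5}]
    sample_means = item_means(sample)
    print(sample_means)
    print(subscale_means(sample_means))
```

With the two hypothetical students above, the Self/Everyday subscale averages 3.75 and the Performance subscale 3.25. Keeping the odd/even rule in a single function means a reordered instrument needs only one change.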
To simplify data collection and reflection, we created a single-page fillable Excel spreadsheet that included (1) a limited number of fillable fields, marked by shading; (2) formulas that automatically compute item averages and item changes between the entry and exit surveys; and (3) open boxes at the bottom with reflection prompts for the instructor to briefly consider the results and potential future actions (see Figures 1 and 2 below for excerpts of the reporting instrument).

Results

Fall 2020 results showed average score improvements, and thus an increase in creative self-efficacy, between the entry and exit surveys for several items, such as "writing a poem" (up 40%) and "making up lyrics to a funny song" (up 3%) (see Figure 1 below). The Performance items improved more than the Self/Everyday items, as one would expect for a creative writing course, which lends further support to the validity of the measures. Interestingly, scores for making up rhymes and composing an original song, neither of which was covered in the class, did not improve; in fact, they dropped slightly.

Figure 1. Reporting Instrument Excerpt/Results for Fall 2020

The instructor found the instrument efficient and easy to use, particularly the automated computation of score changes between the entry and exit surveys. She also found the reflection prompt boxes helpful in initiating revisions for the next semester. After reflecting on the results, the instructor changed the curriculum for the Winter 2021 class, for example by adding examples of rhymed poems to the online textbook and offering an extra credit assignment that engaged students with songwriting and rhyme. In Winter 2021, results showed greater improvement on exactly the Performance items these changes targeted (see Figure 2 below). For example, responses to "making up rhymes" rose 10% in Winter 2021 compared with a 3% drop in Fall 2020, and responses to "composing an original song" rose 25% compared with a 5% drop in Fall 2020.
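The article does not reproduce the spreadsheet's change formulas, so the sketch below rests on an assumption: that each reported figure (such as "up 40%") is the relative change in an item's mean score from the entry survey to the exit survey. Because the surveys were anonymous, entry and exit responses cannot be matched student by student, so the sketch compares group means. The function names and sample means are hypothetical and are not the study's data.

```python
# Assumption: a reported change is (exit mean - entry mean) / entry mean, in percent.
# Entry and exit are compared as group means because the surveys were anonymous.


def percent_change(entry_mean: float, exit_mean: float) -> float:
    """Relative change from the entry mean to the exit mean, in percent."""
    if entry_mean == 0:
        raise ValueError("entry mean must be nonzero")
    return (exit_mean - entry_mean) / entry_mean * 100


def item_changes(entry: dict[int, float], exit_: dict[int, float]) -> dict[int, int]:
    """Whole-percent change for every item that appears in both surveys."""
    return {
        item: round(percent_change(entry[item], exit_[item]))
        for item in entry
        if item in exit_
    }


if __name__ == "__main__":
    # Hypothetical item means on the 1-5 scale, not the study's data.
    entry_means = {1: 2.5, 2: 3.0, 3: 3.2}
    exit_means = {1: 3.5, 2: 2.9, 3: 3.2}
    print(item_changes(entry_means, exit_means))
```

With these hypothetical means the result is `{1: 40, 2: -3, 3: 0}`. If an instrument reports raw differences in scale points instead, `percent_change` would become `exit_mean - entry_mean`; a relative change reads more intuitively, but a given raw gain looks larger for items that start low.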
Satisfied with raising students' self-efficacy in these targeted areas over two terms, the instructor plans to focus next on another item, "teaching someone how to do something" (-8%), by framing in-class experiences, such as workshops and group activities, in new ways. The survey has now become integral to a cycle of reflection, revision, implementation, and feedback, and the instructor feels more engaged with assessment and its real impact on her courses.

Figure 2. Reporting Instrument Excerpt/Results for Winter 2021

Implications

This assessment tool, built from items in the Performance and Self/Everyday subscales of the Kaufman Domains of Creativity Scale, directly shaped class activities that supported curricular goals and learning outcomes in new ways. It prompted reflection and revision, which resulted in students' perceived growth and effectiveness in the areas addressed. The instructor found both the process and the reporting instrument simple to use and informative. Soliciting students' perceptions in entry and exit surveys helped the instructor identify what was effective and where students could be better supported, leading to continuous improvement in her courses and to her own professional development. The instructor found this very rewarding: she could concretely measure the difference each curricular revision made in outcomes from one class to the next, and she continues to use the instrument, adjusting the curriculum each semester and tracking trends over time. A few caveats are worth noting. K-DOCS measures self-reported creative ability rather than actual creative products, though research has found that self-reported creativity can predict creative performance (Furnham et al., 2006). Others may reject this self-report approach in favor of product-based assessment, in which subject-matter experts rate the creativity of student work, often with a standardized Likert-type rubric.
Such an approach has its own strengths and weaknesses that are beyond the scope of this discussion (Said-Metwaly et al., 2017). In our view, there is no single ideal measure of creativity, but rather a set of concerns and priorities, such as reliability, validity, practicality, and usefulness, that must be weighed. For us, the self-report scales we chose best fit our need for reliable, practical, and efficient measures that can serve as a strong foundation for reflection and revision. We suggest that psychometric instruments such as K-DOCS can provide effective, free, meaningful, validated, and actionable measures to add to your assessment arsenal.

References

American Association of University Professors. (2022, June). The annual report on the economic status of the profession. https://www.aaup.org/file/AAUP_ARES_2021%E2%80%932022.pdf

Furnham, A., Zhang, J., & Chamorro-Premuzic, T. (2006). The relationship between psychometric and self-estimated intelligence, creativity, personality, and academic achievement. Imagination, Cognition and Personality, 25(2), 119-145.

Kaufman, J. C. (2012). Counting the muses: Development of the Kaufman Domains of Creativity Scales (K-DOCS). Psychology of Aesthetics, Creativity, and the Arts, 6(4), 294-308.

Kramer, P. I. (2008). The art of making assessment anti-venom: Injecting assessment in small doses to create a faculty culture of assessment. Paper presented at the Association for Institutional Research Annual Forum, Seattle, WA, May 24-28, 2008. https://digitalcommons.csbsju.edu/cgi/viewcontent.cgi?article=1014&context=oarca_pus

MacDonald, S. K., Williams, L. M., Lazowski, R. A., Horst, S. J., & Barron, K. E. (2014). Faculty attitudes toward general education assessment: A qualitative study about their motivation. Research & Practice in Assessment, 9, 74-90. https://files.eric.ed.gov/fulltext/EJ1062845.pdf

Said-Metwaly, S., Van den Noortgate, W., & Kyndt, E. (2017). Approaches to measuring creativity: A systematic literature review. Creativity!, 4(2), 238-275.

Suskie, L. (2018). Assessing student learning: A common sense guide (3rd ed.). Jossey-Bass.