
EMERGING DIALOGUES IN ASSESSMENT

Explorations in Assessment in a Radiologic Technology Program

April 24, 2023

Taryn Price-Lopez, MA, RT (R)(CT)(MR), DACC-NMSU

Introduction

The setting for this ongoing exploration of assessment technique is Doña Ana Community College, part of the New Mexico State University system in southern New Mexico. Specifically, the work takes place in a medical imaging (radiography) program that trains students to become well-informed radiographers (x-ray technologists). The program follows a cohort model in which 20 to 25 students progress through the program together. After two intensive years of didactic and clinical instruction, graduating students must apply all the knowledge they have gained to pass a national licensing exam.

One of the didactic subjects most dreaded by radiography students is reportedly radiation physics. Radiation physics requires learners to understand the electronic circuit components that help produce x-rays and the behavior of electrons in various atomic interactions. Most students attribute their anxiety toward the subject to a lack of foundational knowledge combined with the complexity of the content. Year after year, I have seen students struggle to engage successfully in the course. Traditional assessments usually reveal poor comprehension, and a poor result may prevent a student from persisting in the program.

Traditional assessment approach

Throughout the life of this course, instruction followed a traditional approach: the instructor provided a lecture period, some formative assessment, and a high-stakes exam, then repeated the cycle throughout the semester. This approach was not working for such a complex subject. Not only was it poorly matched to the content, but it also lacked active learning. Additionally, as mental health concerns become an increasingly common consideration, the high-stakes exam makes less and less sense to me. So, why not change it? Why not provide segmented, low-stakes assessments that:

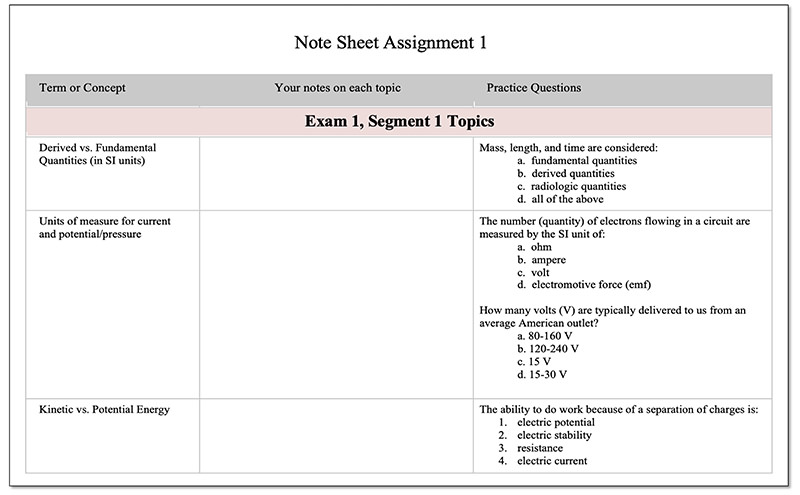

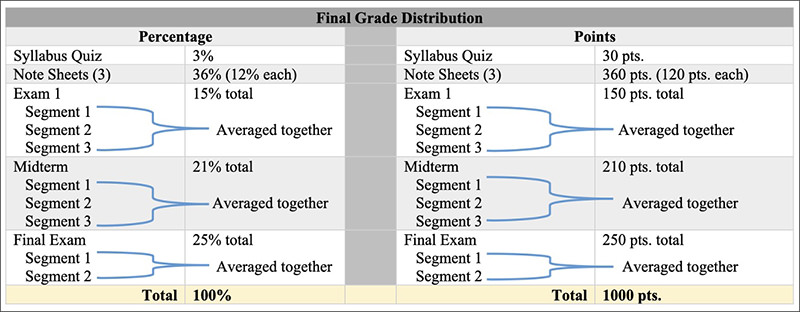

I could not find a reason not to try.

New assessment approach

First, students in a hybrid radiographic physics course were assigned weeks-long Note Sheet Assignments (NSAs; Figure 1). The NSAs count toward the course grade (see the course grading scheme below in Figure 2) and serve as study guides for the module exam segments. Each NSA is formatted as a three-column table. The first column is dedicated to a main concept or topic, with a row for each module. Each module covers approximately five chapters of a physics textbook, as recommended by the textbook authors. In the second column, students write notes and summaries of each topic in their own words; resources on how to summarize are provided. The third column contains practice questions that ask students to apply each topic.

Figure 1: Excerpt from an NSA.

Figure 2: Course grading scheme.

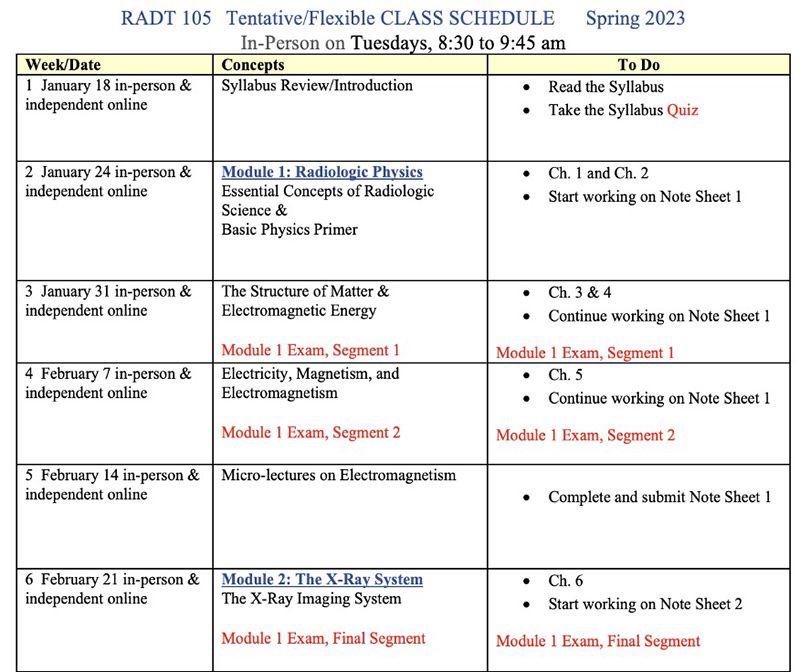

As students work through the NSAs, aligned module exam segments are administered during the in-person sessions of the hybrid class (see the syllabus schedule excerpt in Figure 3).

Figure 3: Syllabus schedule excerpt showing the spacing of module exam segments.

Intentions for this assessment approach

This approach was designed with several intentions. First, I designed it to help students with mental or emotional anxiety toward test taking (Krispenz et al., 2019) by offering frequent, lower-stakes testing situations that together still produce a high-stakes grade. If a student has a mentally or emotionally "off week" during one segment, for example, they still have other opportunities to improve their overall grade, with time in between to seek support from the instructor. Because students take tests more often, they also practice test taking more often. Second, the design discourages the "cram" approach to exam preparation (UNC, n.d.). Instead, students must space out their study time (a best practice in studying) to prepare for each exam segment.
Third, the design provides appropriately spaced scaffolding (van de Pol et al., 2010) by asking students to read the text, listen to lectures and class discussions, explain topics through note-taking, and practice applying their learning before testing. Finally, it encourages note-taking, a best practice in active learning (Brown et al., 2014), by making notes a graded assignment through the NSAs.

Implications of this approach

At the time of writing, a cohort of 25 students in their second program semester had taken three segments of the Module 1 Exam and three segments of the Midterm Exam. Their scores were compared with those of the 2019 cohort of 21 second-semester students. The 2019 cohort was an appropriate comparison because it was the most recent year in which in-person tests were administered in the radiographic physics course; the 2020 and 2021 cohorts completed the course entirely online. For the Module 1 Exam, the average in-person test score increased from approximately 80% to approximately 85%. For the Midterm Exam, which included the historically most challenging content on electric x-ray circuits, the average in-person test score increased from approximately 77% to approximately 83%. While this difference is not quite statistically significant, it is an improvement.

Additionally, students were surveyed on the exam segment approach using the Canvas Quiz tool, and all students preferred the new approach to the traditional one. One student remarked multiple times that "it's the best way I've ever had to take tests" (B. Eggleston, personal communication, 2023).

The most notable effect of this technique was the reduction in student stress. In conversations, students reported that this method imposed much less cognitive load (Seery & Donnelly, 2011) and allowed them to engage more fully with each learning and testing experience. Some test anxiety remained, but students reported that it had dropped to a more manageable level that supported performance.
In one instance, the entire class spoke openly with me (the instructor and author) about the high level of shared anxiety surrounding a high-stakes test in another course that was worth nearly half of that course's grade. Their angst stemmed from the contrast between the delivery method reported here and the more traditional process of the other course; students preferred the former.

Limitations and implications for further exploration

Instructors interested in applying this assessment design in their own courses should consider the course context. For example, this model was implemented in a hybrid setting (50% in person, 50% online independent work). Fully online and HyFlex delivery methods will require special considerations to maintain the academic integrity of testing. Additionally, the model was implemented with a textbook and timeframe that allowed just one or two chapters to be the focus each week. Another factor is the type of assessment typically used in the discipline; my science- and health-based course, for example, relies on exams rather than essays or papers to demonstrate cognitive outcomes. Instructors should also assess learning at multiple points throughout the semester (e.g., reassessing content on a midterm and/or final exam) to avoid rewarding short-term memory over long-term memory (Sotola & Crede, 2021). Another option is to use fill-in-the-blank questions instead of multiple-choice questions on exam segments (Scully, 2017).

Conclusion

Allied health program faculty at Doña Ana Community College are excited about the direction of this new approach and optimistic that it will have a positive impact on student learning and mental health. As the landscape of higher education evolves, innovations in assessment are needed.

References

Brown, P. C., Roediger, H. L., III, & McDaniel, M. A. (2014). Make it stick: The science of successful learning. Belknap Press.

Krispenz, A., Gort, C., Schültke, L., & Dickhäuser, O. (2019). How to reduce test anxiety and academic procrastination through inquiry of cognitive appraisals: A pilot study investigating the role of academic self-efficacy. Frontiers in Psychology, 10, Article 1917.

Nilson, L. B., & Goodson, L. A. (2018). Online teaching at its best: Merging instructional design with teaching and learning research. Jossey-Bass.

Scully, D. (2017). Constructing multiple-choice items to measure higher-order thinking. Practical Assessment, Research, and Evaluation, 22(4). https://doi.org/10.7275/swgt-rj52

Seery, M. K., & Donnelly, R. (2011). The implementation of pre-lecture resources to reduce in-class cognitive load: A case study for higher education chemistry. British Journal of Educational Technology, 1-11.

Sotola, L. K., & Crede, M. (2021). Regarding class quizzes: A meta-analytic synthesis of studies on the relationship between frequent low-stakes testing and class performance. Educational Psychology Review, 33, 407-426.

University of North Carolina (UNC). (n.d.). Studying 101: Study smarter not harder. https://learningcenter.unc.edu/tips-and-tools/studying-101-study-smarter-not-harder/

van de Pol, J., Volman, M., & Beishuizen, J. (2010). Scaffolding in teacher-student interaction: A decade of research. Educational Psychology Review, 22(3).