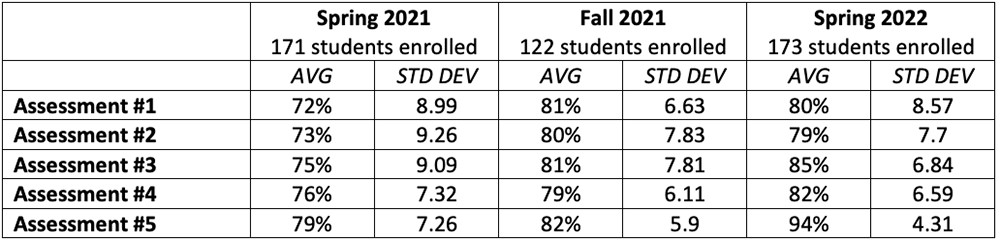

EMERGING DIALOGUES IN ASSESSMENT

Benefits of Aligning Low- and High-Stakes Assessment and the Use of Feedback in Rubrics to Improve Student Learning Outcomes

June 14, 2023

Melanie Harris, MS, University of North Carolina at Charlotte

At the University of North Carolina at Charlotte, all pre-nursing students are required to take Fundamentals of Microbiology (BIOL2259), a large-enrollment course taught in the spring and fall terms. The course uses a student-centered approach that includes ten weekly class activities requiring instructor review and feedback. It also includes five high-stakes assessments consisting primarily of free-response questions. However, with so many students, there is little time to provide consistent, high-quality feedback: 171 students were enrolled in Spring 2021 and 122 in Fall 2021. These students must earn a B or better in this course to apply to the Nursing program, and in practice a grade of A is needed to be competitive.

Although the circumstances were atypical (the course was fully online due to the pandemic), the Spring 2021 cohort was a typical group of students in terms of performance on assessments. During that term, the high-stakes assessment averages ranged from 72 to 79. While these averages were not bad, I believed students' assessment grades would improve if they received better explanations of why they were missing items on the weekly assignments that prepared them for the high-stakes assessments. In other words, I expected to see learning improvement if I had a mechanism for providing better feedback.

The tool I chose was Gradescope (gradescope.com). In the Fall 2021 term, I implemented Gradescope to increase learning and success rates in my course, and this revised assessment approach continued into the Spring 2022 term. The course was taught in a hybrid format in both Fall 2021 and Spring 2022.
Spring 2021 data are included for comparison.

Gradescope is a tool that can be used to administer and grade all course activities. It integrates with learning management systems such as Canvas, so grades can be uploaded easily. Canvas does offer rubrics and feedback, but rubrics can only be created for assignments, not quizzes, and feedback on Canvas quizzes must be entered individually. One benefit of Gradescope is that its rubrics allow the instructor to create tailored feedback statements that are saved and can be selected easily while grading a particular question. In Gradescope, students cannot see the rubric or any feedback statements until the grades are posted. Therefore, I did not create a rubric until I started grading. As I built the rubric, I added appropriate feedback statements based on the student responses. Once grading was complete, the grades were published in Gradescope and uploaded to Canvas. Students receive an email notification when grades are available. Because the feedback resides in Gradescope, students must view their graded assignment within Gradescope to see it.

Most weeks, students completed and submitted a class activity. Some of the assignments were newly created, while others were reused from previous semesters. In addition to using rubrics to assess and provide feedback, I created new assignments designed to help students practice the problem-solving skills that are part of the high-stakes assessments.

Using rubrics within Gradescope produced some positive results. Table 1 shows the averages and standard deviations for the high-stakes assessments across three semesters. Gradescope was not used in Spring 2021 but was used in Fall 2021 and Spring 2022.

Table 1: The assessment averages and standard deviations for the high-stakes assessments for three semesters.

It is worth noting that the assessment averages improved while the standard deviations decreased. This reflects the learning improvement I had hoped to see. Research has shown that providing appropriate feedback can reduce outcome gaps between marginalized and majority groups of students (Cohen et al., 1999). In this case, however, it was not possible to establish a correlation between consistently doing well and consistently viewing the feedback on weekly activities in Gradescope. For instance, in both Fall 2021 and Spring 2022, some students reviewed the feedback weekly but did not perform particularly well on the assessments, while others never viewed the feedback and still did very well.

Through my first two semesters using Gradescope, I learned a few important lessons. First, the time invested in learning how to use Gradescope (setting up assignments, building rubrics, etc.) was significant. Grading 100+ assignments still takes time, but Gradescope does make it easier and faster. Second, using a third-party tool to provide feedback has a limitation: the feedback resides in Gradescope, not in the learning management system (LMS). Using rubrics and providing feedback in the LMS itself, when possible, may make students more likely to use the feedback. Third, and just as important, instructors need to ensure that students review the feedback. In the future, I can ask students to reflect on whether the feedback they received was useful. Alternatively, students can be asked to resubmit an assignment along with an explanation of how they incorporated the feedback into their changes. My best advice to others is to stress the benefit of reviewing the feedback and using it to improve learning.

One last benefit of online rubrics, such as those in Gradescope or in learning management systems, is the data analytics they provide.
Reviewing these analytics helps an instructor identify learning gaps. With more thoughtful activity design and continued improvement in the feedback given, I am confident that further learning gains will follow.

References

Cohen, G. L., Steele, C. M., & Ross, L. D. (1999). The Mentor's Dilemma: Providing Critical Feedback Across the Racial Divide. Personality and Social Psychology Bulletin, 25(10), 1302-1318. https://doi.org/10.1177/0146167299258011

Gregory, J. (2014, September). Creating and Using Rubrics. IUPUI Center for Teaching and Learning. https://ctl.iupui.edu/Resources/Assessing-Student-Learning/Creating-and-Using-Rubrics