EMERGING DIALOGUES IN ASSESSMENT

Applying Kirkpatrick’s Evaluation Model in STEM Education: Assessing Learning Outcomes to Meet Industry Demands

September 11, 2025

Abstract

This paper presents a systematic approach to evaluating learning outcomes in a National Science Foundation-funded computational material science (CMS) course designed for first-generation, Hispanic, and low-income students. In response to the growing demand for CMS expertise and the need to prepare students for professional environments, the authors adopted Kirkpatrick’s four-level evaluation model as a guiding framework. The model begins with Level One, focusing on student reactions and overall satisfaction, then advances to Level Two, which assesses knowledge and skill development through both conventional testing and reflective writing. Level Three emphasizes the transfer of learning, evaluated through mock interviews and faculty observations, while Level Four examines the long-term impact of the program on employment outcomes. By applying this model, the study tackles the complexity of measuring multifaceted competencies in STEM education and provides a clear structure for supporting continuous improvement. Ultimately, this approach offers educators a practical, evidence-based method for aligning assessment practices with both academic standards and industry expectations.

Background

Demand for computational material science (CMS) is growing because it is an important linking subject between engineering, science, and technology (US Department of Labor, 2022). To address this demand, a two-year (2024-2026) project funded by the National Science Foundation was designed to encourage the participation of first-generation, Hispanic, and low-income students in STEM fields at West Texas A&M University. The project team found that a large proportion of students in this rural region have extremely limited exposure to this vital subject area, potentially hindering their employability. Central to this NSF-funded project is a pilot course in CMS.
To ensure the project develops and delivers a course that is rigorous and enables students to hone industry-based competencies, we embedded workplace-oriented learning outcomes into the CMS curriculum:

Because this hybrid course is workplace-oriented, the project team administers a course feedback questionnaire, the End-of-Course Survey, during the last week of the course to assess students’ satisfaction with course content, usefulness, and delivery. A four-point scale was selected based on DeCastellarnau’s (2018) literature review, which reports that including a neutral point increases the risk of survey satisficing and consequently lowers measurement quality. With a four-point scale, students must therefore indicate a preference. The questionnaire includes items such as the following:

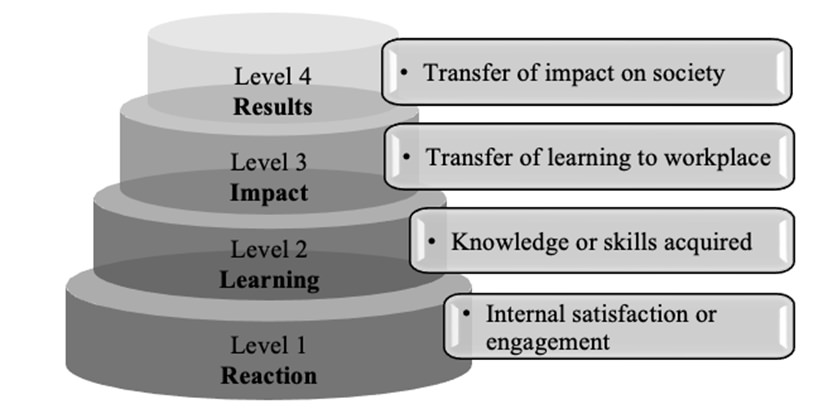

The closed-ended items use a four-point scale: “Strongly Agree,” “Agree,” “Disagree,” and “Strongly Disagree.”

As Sui et al. (2018) emphasized, educators have a duty to nurture college students’ employability skills while students’ identities are transitioning from college to the workplace. However, assessing student learning outcomes in competency-based STEM courses is challenging: it involves conceptualizing and measuring multidimensional competencies, designing authentic and consistent assessment tools, and ensuring validity and fairness (Boud & Soler, 2016; Frank et al., 2010; Hager & Gonczi, 1991; Herman & Cook, 2013; Kane & Wools, 2013). To address these challenges, instructors will use Kirkpatrick’s (1998) four-level evaluation model (see Figure 1) to assess the learning outcomes, looking in turn at students’ reactions, learning, behavior, and results.

Figure 1. Note. Adapted from Evaluating Training Programs: The Four Levels (2nd ed., Ch. 3), by D. L. Kirkpatrick, 1998, Berrett-Koehler.

Level One Evaluation: Reactions

According to Kirkpatrick (1998), first-level evaluations assess learners’ reactions to the educational experience. In our project, we incorporate questionnaires designed to ascertain students’ satisfaction and engagement with the material and the instructors. Level one evaluations give the team indirect measures from our students, and this indirectness is their salient limitation. Level one questionnaires are sometimes referred to as “reaction,” “smile,” or “happy” sheets because they record students’ self-perceptions (Kirkpatrick, 1998, p. 267).

Level Two Evaluation: Learning

Second-level evaluations allow us to assess the degree to which learners have obtained the knowledge, skills, and attitudes we intended. In addition to traditional cognition-based assessments such as quizzes, exams, assignments, and practical demonstrations (a course project), which align with the outline requirements of the Accreditation Board for Engineering and Technology (ABET), we included two open-ended items on the End-of-Course Survey. For the first item, learners write a reflection of at least 100 words comparing their expectations before taking the course with their major takeaways after completing it. For the second item, learners select one specific artifact from the course learning materials (lectures, readings, learning activities, or practical projects) and write a reflection of at least 100 words on that artifact.

To assess the outcomes of level two evaluations, we use the quiz, assignment, exam, and demonstration scores. To assess the reflections, we will use the Griffith University Affective Learning Scale (GUALS) rubric (Rogers et al., 2018). In earlier research (Nix et al., 2022; Song et al., 2021), affect was determined to be a strong mediator of cognition.
The level two evaluation establishes the degree to which learners achieved the learning outcomes for the course. It also shows our team what learners gained in knowledge, based on their own comparisons of their pre-course and post-course perceptions. The students’ reflections provide evidence that the instructors can use as they reshape the course.

Level Three Evaluation: Behaviors

Level three evaluation activities focus on whether learners use what they learned when they enter the workplace. In a higher education context, this can be measured through internships or project-based learning that demonstrate the transfer of theory to practice. However, because this CMS course is designed as an introductory graduate course, internship-based assessments are not feasible. Instead, we plan to interview the STEM faculty who teach students in subsequent, sequential courses after those students have successfully completed the CMS course. The interviews will be one-on-one and semi-structured, designed to glean faculty perceptions of CMS completers’ performance in later coursework. We will also ask faculty about their perceptions of students who did not take the CMS course. The data will be analyzed following the tenets of qualitative content analysis (Mayring, 2021), and keywords will be compared with what the students said about their own learning.

Concurrently, our team will use data from the learning management system to track the engagement and participation of students who have already completed the CMS course. We will review learner participation records, such as course logins and time on learning activities, for each subsequent, sequential course, and compare CMS completers with students who did not take the CMS course.

Another direct assessment activity for level three evaluations will be mock interviews.
Being an Interviewee: The team will collaborate with the Office of Career and Professional Development (OCPD) to provide mock interviews to students. The CMS instructors will work with local industries to capture their expectations and requirements for new hires; this information will inform part one of the mock interview (hard skills) and part two (soft skills). After the mid-term exam, all students must schedule one-on-one mock interviews with the OCPD. The OCPD will submit written post-interview comments, including its hiring decisions, directly to the grant team. Students will write reflections (also assessed with the GUALS rubric) so that we may compare students’ feelings with the OCPD’s evaluative feedback.

Being an Interviewer: Students will interview at least two potential employers, chosen from the course’s guest speakers or from a job fair the students attended on their own. After the two interviews, students will submit career-plan adjustments. The OCPD will evaluate the student submissions with a rubric it has already constructed, and the evaluations will be shared with the grant team.

Level Four Evaluation: Results

The fourth level of assessment looks at the overall effects of the educational program on the larger goals and objectives of the grant. In higher education, this may involve looking at graduation rates, graduates’ employment rates, and alumni stories. However, because the CMS course is bounded by institutional timelines such as semesters, those approaches are not appropriate for this project. At this time, level four evaluations are planned but not finalized; we hope to interview the supervisors of students who give us permission to do so after they graduate.

Conclusion

CHEA (2017) indicated that accreditation relies on a broad range of reliable and valid evidence of student learning being provided to accreditors.
Praslova (2010) suggested that the four-level Kirkpatrick evaluation framework provides a holistic and systematic means of aligning specific indicators with educational criteria, such as accreditation requirements and workforce expectations. Although Kirkpatrick’s evaluation model was designed to evaluate training programs in business settings, its levels allow educators to measure or evaluate student learning from immediate reactions through long-term impacts. Moreover, the information generated from this model will yield a structured framework or logic model for evaluating and continually improving the course (Combs et al., 2007).

References

Boud, D., & Soler, R. (2016). Sustainable assessment revisited. Assessment & Evaluation in Higher Education, 41(3), 400–413. https://doi.org/10.1080/02602938.2015.1018133

CHEA. (2017). Accreditation and student learning outcomes. Council for Higher Education Accreditation. https://www.chea.org/sites/default/files/pdf/Accreditation%20and%20Student%20Learning%20Outcomes%20-%20Final.pdf

Combs, K. L., Gibson, S. K., Hays, J. M., Saly, J., & Wendt, J. T. (2007). Enhancing curriculum and delivery: Linking assessment to learning objectives. Assessment & Evaluation in Higher Education, 33(1), 87–102. DOI:10.1080/02602930601122985

DeCastellarnau, A. (2018). A classification of response scale characteristics that affect data quality: A literature review. Quality & Quantity, 52(4), 1523–1559. DOI:10.1007/s11135-017-0533-4

Frank, J. R., Snell, L. S., Cate, O. T., Holmboe, E. S., Carraccio, C., Swing, S. R., … Harris, K. A. (2010). Competency-based medical education: Theory to practice. Medical Teacher, 32(8), 638–645. DOI:10.3109/0142159X.2010.501190

Hager, P., & Gonczi, A. (1991). Competency-based standards: A boon for continuing professional education? Studies in Continuing Education, 13(1), 24–40. DOI:10.1080/0158037910130103

Herman, J., & Cook, A. (2013). Fairness in classroom assessment. In Brookhart, S. M., & McMillan, J. H. (Eds.), Classroom assessment and educational measurement (1st ed., pp. 243–265). DOI:10.4324/9780429507533

Kane, M. T., & Wools, S. (2013). Perspectives on the validity of classroom assessment. In Brookhart, S. M., & McMillan, J. H. (Eds.), Classroom assessment and educational measurement (1st ed., pp. 11–27). DOI:10.4324/9780429507533

Kirkpatrick, D. L. (1998). Evaluating training programs: The four levels (2nd ed.). Berrett-Koehler.

Mayring, P. (2021). Qualitative content analysis: A step-by-step guide. Sage.

Nix, J. V., Zhang, M., & Song, L. M. (2022). Co-regulated online learning: Formative assessment as learning. Intersection: A Journal at the Intersection of Assessment & Learning, 3(2). DOI:10.61669/001c.36297

Praslova, L. (2010). Adaptation of Kirkpatrick’s model in higher education. Assessment & Evaluation in Higher Education, 35(2), 171–188. DOI:10.1007/s11092-010-9098-7

Rogers, G., Mey, A., Chan, P., Lombard, M., & Miller, F. (2018). Development and validation of the Griffith University Affective Learning Scale (GUALS): A tool for assessing affective learning in health professional students’ reflective journals. MedEdPublish. DOI:10.15694/MEP.2018.000002.1

Song, L. M., Nix, J. V., & Levy, J. D. (2021). Assessing affective learning outcomes through a meaning-centered curriculum. Proceedings of the Association for Assessment of Learning in Higher Education (AALHE), June 7-11, 2021 Annual Conference, pp. 15–37.

Sui, F. M., Chang, J. C., Hsiao, H. C., Chen, S. C., & Chen, D. C. (2018). A study regarding the gap between the industry and academia expectations for college student’s employability. 2018 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), 1573–1577. DOI:10.1109/IEEM.2018.8607269

US Department of Labor. (2025). What material engineers do. https://www.bls.gov/ooh/architecture-and-engineering/materials-engineers.htm#tab-2